Container and hardware virtualization under one roof

Double Sure

The Proxmox Virtual Environment, or VE for short, is an open source project [1] that provides an easy-to-manage, web-based virtualization platform administrators can use to deploy and manage OpenVZ containers or fully virtualized KVM machines. In creating Proxmox, the developers have achieved their vision of allowing administrators to create a virtualization structure within a couple of minutes. The Proxmox bare metal installer will convert virtually any PC into a powerful hypervisor for the two most popular open source virtualization technologies, KVM and OpenVZ.

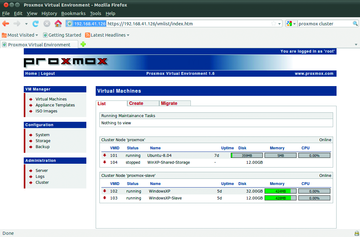

Additionally, the system lets you set up clusters comprising at least two physical machines (see the "Cluster Setup" box). One server always acts as the cluster master, to which you can assign other servers as nodes. Strictly speaking, this is not genuine clustering with load balancing, but the model does support centralized management of all the nodes in the cluster, even though the individual virtual machines in a Proxmox cluster are always assigned to one physical server. The clear-cut web interface facilitates the process of creating and managing virtual KVM machines or OpenVZ containers and live migration of virtual machines between nodes (Figure 1).

Figure 1: Proxmox VE supports creating and managing OpenVZ containers (Ubuntu 8.04) and virtualized KVM machines.

Cluster Setup

To set up a cluster, you will need to visit the command line. Proxmox provides its PVE Cluster Administration Toolkit pveca for the job. The command:

pveca -c

designates the local server as the cluster master; to discover the server's current status, you can type:

pveca -l

To add a second physical server, log in to that server locally or via SSH and then type:

pveca -a -h <IP of the cluster master>

to make the second server part of the cluster.
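
The sequence described in this box can be sketched as a small dry-run helper. It only prints the pveca calls named above instead of running them; the function name cluster_plan and the example addresses are illustrative assumptions, not part of Proxmox.

```shell
#!/bin/sh
# Dry-run sketch: print the pveca commands for a master and its nodes.
# cluster_plan is a hypothetical helper; it does not execute pveca.
cluster_plan() {
    master_ip=$1
    shift
    # Step 1: the first server becomes the cluster master.
    echo "on ${master_ip}: pveca -c"
    # Step 2: each further server joins by pointing at the master.
    for node_ip in "$@"; do
        echo "on ${node_ip}: pveca -a -h ${master_ip}"
    done
    # Step 3: query the cluster status on the master.
    echo "on ${master_ip}: pveca -l"
}

# Example with made-up addresses:
cluster_plan 192.168.1.10 192.168.1.11
```

Printing the plan first makes it easy to review which command belongs on which machine before typing anything on a production host.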

Offering the two most popular free virtualization technologies on a single system gives administrators the freedom to choose the best virtualization solution for the task at hand. OpenVZ [2] is a resource container, whereas KVM [3] supports genuine hardware virtualization in combination with the right CPU. Both virtualization technologies support maximum performance for the guest system in line with the current state of the art.

OpenVZ

The OpenVZ resource container typically is deployed by hosting service providers wanting to offer their customers virtual Linux servers (vServers). The setup doesn't emulate hardware for the guest system but is based on the principle that any operating system comprises a number of active processes, a filesystem with installed software, storage space for data, and a number of device access functions, from the application's point of view. Depending on the scale of the OpenVZ host (node), you can run as many containers as you like in parallel. Each container is designed to provide what looks like a complete operating system with a runtime environment from the applications' point of view.

In the host context, containers are simply directories. All the containers share the kernel with the host system and can only be of the same type as the host operating system, which is Linux. An abstraction layer in the host kernel isolates the containers from one another and manages the required resources, such as CPU time, memory requirements, and hard disk capacity. Because each container only consumes as much CPU time and RAM as the applications running in it, and the abstraction layer overhead is negligible, virtualization based on OpenVZ is unparalleled with respect to efficiency. The Linux installation on the guest only consumes disk space. OpenVZ is thus always the best possible virtualization solution if you have no need to load a different kernel or driver in the guest context to access hardware devices.
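
Because containers appear to the host as plain directories, a few lines of shell are enough to enumerate them. The following is a minimal sketch: the helper name list_containers is hypothetical, and the default path /var/lib/vz/private is an assumption about where the private areas live on a given host, so pass the real location as an argument if it differs.

```shell
#!/bin/sh
# List container IDs by looking at the directories under a private area.
# list_containers is a hypothetical helper; the default path is an assumption.
list_containers() {
    private_area=${1:-/var/lib/vz/private}
    for dir in "$private_area"/*/; do
        # Skip the unexpanded pattern if the area is empty or missing.
        [ -d "$dir" ] || continue
        basename "$dir"
    done
}

list_containers
```

Running a tool such as du on one of these directories would show the disk space that the guest's Linux installation occupies.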

Hardware Virtualizers

In contrast to this solution, hardware virtualizers offer more flexibility than resource containers with respect to the choice of guest system; however, the performance depends to a great extent on the ability to access the host system's hardware. The most flexible but slowest solution is complete emulation of privileged processor instructions and I/O devices, as offered by emulators such as Qemu without KVM. Hardware virtualization without CPU support, as offered by VMware Server or Parallels, entails a performance hit of about 30 percent compared with hardware-supported virtualization.

Paravirtualizers like VMware ESX or Xen implement a special software layer between the host and guest systems that gives the guest system access to the resources on the host via a special interface. However, this kind of solution means modifying the guest system to ensure that privileged CPU functions are rerouted to the hypervisor, which means having custom drivers (kernel patches) on the guest.

Hardware-supported virtualization, which Linux implements at kernel level with KVM, also emulates a complete PC hardware environment for the guest system, so that you can install a complete operating system with its own kernel. Full virtualization is transparent for the guest system, thus removing the need to modify the guest kernel or install special drivers on the guest system. However, these solutions do require a CPU with a VT extension (Intel VT/Vanderpool or AMD-V/Pacifica), which makes the guest system believe that it has the hardware all to itself, and thus privileged access, although the guest systems are effectively isolated from doing exactly that. The host system or a hypervisor handles the task of allocating resources.
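
Whether a host CPU advertises one of these extensions can be read from its flags in /proc/cpuinfo: vmx stands for Intel VT and svm for AMD-V. The sketch below wraps that check in a function; the name vt_extension and its output labels are illustrative choices.

```shell
#!/bin/sh
# Report which hardware virtualization extension a cpuinfo file advertises.
# vmx = Intel VT, svm = AMD-V. vt_extension is a hypothetical helper name.
vt_extension() {
    cpuinfo=${1:-/proc/cpuinfo}
    if grep -Eq '^flags.*[[:space:]]vmx([[:space:]]|$)' "$cpuinfo" 2>/dev/null; then
        echo "intel-vt"
    elif grep -Eq '^flags.*[[:space:]]svm([[:space:]]|$)' "$cpuinfo" 2>/dev/null; then
        echo "amd-v"
    else
        echo "none"
    fi
}

vt_extension
```

A flag in this list only shows that the CPU supports the extension; it can still be disabled in the BIOS, in which case KVM will not be able to use it.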

One major advantage compared with paravirtualization is that the hardware extensions allow you to run unmodified guest systems, and thus support commercial guest systems such as Windows. In contrast to other hardware virtualizers, like VMware ESXi or Citrix XenServer, KVM is free, and it became an official Linux kernel component in kernel version 2.6.20.

KVM Inside

As a kind of worst-case fallback, KVM can provide a slow but functional emulator for privileged functions, but it provides genuine virtual I/O drivers (PCI Passthrough) for most guest systems and benefits from the VT extensions of today's CPUs. The KVM driver CD [4] offers a large selection of virtual I/O drivers for Windows. Most distributions provide virtual I/O drivers for Linux.

The KVM framework on the host comprises the /dev/kvm device and three kernel modules: kvm.ko and, depending on the CPU, kvm-intel or kvm-amd for access to the Intel or AMD instruction set extensions. KVM extends the user and kernel operating modes on VT-capable CPUs by adding a third, known as guest mode, in which KVM starts and manages the virtual machines, which in turn see a privileged CPU context. The kvm.ko module accesses the architecture-dependent modules, kvm-intel and kvm-amd, directly, thus removing the need for time-consuming MMU emulation.
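
To see which of these pieces are present on a running host, you can look at /proc/modules and test for the device node. The following is a minimal sketch; kvm_status is a hypothetical helper name, and both paths can be overridden as arguments. Note that the kernel lists loaded modules with underscores, so kvm-intel appears as kvm_intel.

```shell
#!/bin/sh
# Check whether the KVM pieces named above are present on a host.
# kvm_status is a hypothetical helper; both paths can be overridden.
kvm_status() {
    modules=${1:-/proc/modules}
    device=${2:-/dev/kvm}
    # /proc/modules starts each line with the module name and a space.
    for mod in kvm kvm_intel kvm_amd; do
        if grep -q "^${mod} " "$modules" 2>/dev/null; then
            echo "${mod}: loaded"
        else
            echo "${mod}: not loaded"
        fi
    done
    if [ -e "$device" ]; then
        echo "${device}: present"
    else
        echo "${device}: missing"
    fi
}

kvm_status
```

On a working KVM host, you would expect kvm plus exactly one of the two architecture modules to be reported as loaded, along with the device node.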