
Secure microservices with centralized zero trust

Inspired

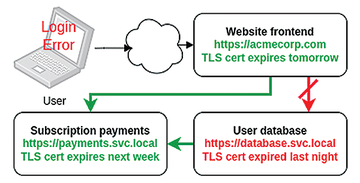

How often have you been caught out by an application that has suddenly and catastrophically failed, only to discover a message deep in the logs of some unexciting but critical component, such as "Unable to connect to https://database.service.local – expired TLS certificate"? You smack your head in frustration that such a preventable failure brought down your carefully crafted microservices-based architecture. With an expired TLS certificate, your database could no longer prove its identity or play a part in a zero trust network (Figure 1).

Figure 1: Expiry of a single service's Transport Layer Security (TLS) certificate makes the whole application unusable.
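A failure like this is usually detectable before it happens, because every certificate carries its expiry date. The following is a minimal sketch of such a check, using only the Python standard library. The function names and the 30-day renewal threshold are illustrative assumptions, not part of any tool discussed in this article.

```python
import socket
import ssl
from datetime import datetime, timezone


def days_until_expiry(not_after: str, now: datetime) -> float:
    """Return the days between `now` and a certificate's notAfter timestamp.

    `not_after` uses the format returned by SSLSocket.getpeercert(),
    for example "Jan  5 09:34:43 2018 GMT".
    """
    expires = datetime.fromtimestamp(
        ssl.cert_time_to_seconds(not_after), tz=timezone.utc
    )
    return (expires - now).total_seconds() / 86400


def needs_renewal(not_after: str, now: datetime, threshold_days: float = 30) -> bool:
    """True if the certificate expires within `threshold_days` (assumed default)."""
    return days_until_expiry(not_after, now) < threshold_days


def fetch_not_after(host: str, port: int = 443) -> str:
    """Connect to host:port over TLS and return the peer certificate's notAfter.

    The default context verifies the certificate, so an already expired
    certificate raises ssl.SSLCertVerificationError during the handshake.
    This helper is therefore only useful for spotting upcoming expiry.
    """
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=5) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()["notAfter"]
```

Run on a schedule, a call such as `needs_renewal(fetch_not_after("database.service.local"), datetime.now(timezone.utc))` would flag the certificate weeks before clients start refusing connections. The port and the assumption that the issuing CA is in the local trust store will vary by environment.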


Certificate expiry is just one example of how the human factors involved in identity management can be high maintenance, prone to error, and the source of vulnerabilities. Yet, cast-iron workload identities are, by definition, the foundation of zero trust application architecture. No two workloads should trust each other's legitimacy simply because they both happen to be running on the same physical host or within the same network perimeter. Instead, each TCP session is validated by both participants, and no data is exchanged without each side knowing for sure who is at the
