Link aggregation with kernel bonding and the Team daemon

Battle of Generations

No matter how many network interfaces a server has and how the admin combines them, hardware alone is not enough. To turn multiple network cards into a genuine failover solution or a high-throughput data link, the host's operating system must also know that the devices work in tandem. On Linux, the usual answer to this problem is the kernel's bonding driver; an alternative is libteam, which runs in user space and offers a number of additional functions and features.
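To make the user-space approach concrete, here is a minimal Python sketch that builds a JSON configuration for the Team daemon (teamd), the program built on libteam. It assumes teamd's JSON keys `device`, `runner` (with a `name` such as `activebackup`), `link_watch` and `ports`. The function name `make_teamd_config` and the interface names `team0`, `eth0` and `eth1` are hypothetical examples, not taken from the article.

```python
import json


def make_teamd_config(device: str, ports: list[str], runner: str = "activebackup") -> str:
    """Return a teamd JSON configuration string (hypothetical helper, sketch only).

    Assumes teamd's keys "device", "runner", "link_watch" and "ports";
    the device and port names passed in are illustrative placeholders.
    """
    if not ports:
        raise ValueError("a team needs at least one port")
    config = {
        # name of the logical team interface the OS will use
        "device": device,
        # runner = the aggregation policy; "activebackup" keeps one port active
        "runner": {"name": runner},
        # ethtool link watcher: reacts to carrier changes reported by the NIC driver
        "link_watch": {"name": "ethtool"},
        # each entry is a physical NIC to be attached to the team device
        "ports": {port: {} for port in ports},
    }
    return json.dumps(config, indent=2)


if __name__ == "__main__":
    print(make_teamd_config("team0", ["eth0", "eth1"]))
```

The resulting file could then be handed to teamd with its `-f` option. Keeping the configuration as generated JSON, rather than hand-edited text, makes it easy to template the same team layout across many servers.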

A Need for NICs

Many people who see a data center from the inside for the first time are surprised by the large number of LAN ports on an average server (Figure 1): Virtually no server can manage with just one network interface, for several reasons.

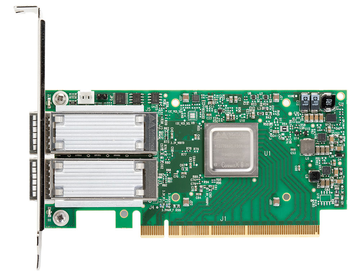

Figure 1: Network cards with multiple ports for redundancy or performance purposes are the rule rather than the exception in data centers.

- The owner might use several network interfaces to increase redundancy. In such a setup, the server is connected to separate switches by different network ports, so that the failure of one network device does not take down the server.

- The admin might want to
