Building a defense against DDoS attacks

One Against All

Securing Websites

Many websites offer a members-only area that can be accessed by password. As with an SSH service, the attacker tries typical username and password combinations; this method will eventually lead to success unless a form of website protection puts a stop to it.

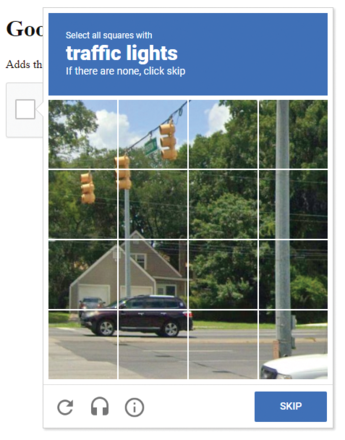

The website protector works like this: It sends a task to the client's web browser and only displays the requested web content once the task has been completed. The task can vary from a simple "I am not a robot" checkbox to, say, "Select all squares with traffic lights." Other protectors package the task in JavaScript, which the browser processes without human intervention (proof of work). In all cases, access to the web service is slowed down so that a DDoS attacker can make significantly fewer requests per second. The trick with web protection is that the client, not the server, bears the high load: The client receives the difficult task, and the server only checks whether the result is correct.
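The asymmetry between client and server can be illustrated with a small proof-of-work sketch. It is not how any particular product implements its challenge; the hash-based puzzle, the function names, and the difficulty value are all assumptions chosen for illustration. The client has to try many counters, whereas the server checks the answer with a single hash.

```python
import hashlib
import secrets


def leading_zero_bits(digest: bytes) -> int:
    """Count the zero bits at the start of a hash digest."""
    bits = 0
    for byte in digest:
        if byte == 0:
            bits += 8
            continue
        # For a nonzero byte, bit_length() reveals how many leading bits are zero
        bits += 8 - byte.bit_length()
        break
    return bits


def issue_challenge() -> str:
    """Server side: hand out a random, unpredictable token."""
    return secrets.token_hex(16)


def solve(challenge: str, difficulty: int) -> int:
    """Client side: try counters until the hash starts with enough zero bits."""
    counter = 0
    while True:
        digest = hashlib.sha256(f"{challenge}:{counter}".encode()).digest()
        if leading_zero_bits(digest) >= difficulty:
            return counter
        counter += 1


def verify(challenge: str, counter: int, difficulty: int) -> bool:
    """Server side: one hash is enough to check the client's answer."""
    digest = hashlib.sha256(f"{challenge}:{counter}".encode()).digest()
    return leading_zero_bits(digest) >= difficulty
```

With a difficulty of 12 bits, the client needs about 4,096 hash attempts on average, and each additional bit doubles that effort, while the server's cost stays at one hash per check.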

This DDoS protection does not require you to tinker with the existing HTML code. The web protector works as a benign man-in-the-middle and inserts its robot checks upstream of the actual web pages. Technically, this takes the form of a reverse proxy that receives and analyzes the web requests of all clients. Is this client submitting an unusually high number of requests? Then a web challenge is issued that human visitors have to answer.
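The decision of when to issue a challenge could be sketched as a sliding-window counter per client address. The class name, the threshold, and the window length below are hypothetical; real protectors are likely to weigh additional signals.

```python
import time
from collections import defaultdict, deque
from typing import Optional


class RequestTracker:
    """Remember recent request times per client and flag heavy hitters."""

    def __init__(self, max_requests: int = 20, window_seconds: float = 10.0):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits = defaultdict(deque)  # client IP -> timestamps of recent requests

    def needs_challenge(self, client_ip: str, now: Optional[float] = None) -> bool:
        """Record one request; return True if the client exceeds the limit."""
        now = time.monotonic() if now is None else now
        hits = self._hits[client_ip]
        hits.append(now)
        # Discard requests that have fallen out of the observation window
        while hits and hits[0] <= now - self.window_seconds:
            hits.popleft()
        return len(hits) > self.max_requests
```

A proxy would call `needs_challenge()` for every incoming request and only route the client through the challenge page when the method returns `True`, so that ordinary visitors are not bothered.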

The reverse proxy is a piece of software that accepts HTTP requests but has no content itself. For the response, the reverse proxy accesses another web server and delivers the HTML code as a proxy. As an intermediary in the data stream, the proxy can inject all sorts of things into the HTML lines, such as captchas.
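To make the idea concrete, the following sketch uses only the Python standard library to fetch a page from a back-end server and insert a snippet before the closing body tag. The back-end address, the snippet, and the port are placeholders, and a production proxy would also have to handle other HTTP methods, header forwarding, compression, and error pages.

```python
import re
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

BACKEND = "http://10.1.1.84:80"  # assumed back-end web server
CHALLENGE_SNIPPET = '<script src="/challenge.js"></script>'  # hypothetical snippet

_BODY_END = re.compile(r"</body\s*>", re.IGNORECASE)


def inject(html: str, snippet: str) -> str:
    """Insert the snippet before the last </body> tag, or append it."""
    matches = list(_BODY_END.finditer(html))
    if not matches:
        return html + snippet
    pos = matches[-1].start()
    return html[:pos] + snippet + html[pos:]


class ProxyHandler(BaseHTTPRequestHandler):
    """Answer GET requests with content fetched from the back end."""

    def do_GET(self):
        try:
            with urllib.request.urlopen(BACKEND + self.path, timeout=5) as upstream:
                body = upstream.read()
                content_type = upstream.headers.get(
                    "Content-Type", "application/octet-stream")
                charset = upstream.headers.get_content_charset() or "utf-8"
        except urllib.error.URLError:
            # Also covers HTTP error statuses returned by the back end
            self.send_error(502, "Backend unavailable")
            return
        if content_type.startswith("text/html"):
            page = body.decode(charset, errors="replace")
            body = inject(page, CHALLENGE_SNIPPET).encode(charset, errors="replace")
        self.send_response(200)
        self.send_header("Content-Type", content_type)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port: int = 8080) -> None:
    """Start the proxy; this call blocks until the process is interrupted."""
    HTTPServer(("0.0.0.0", port), ProxyHandler).serve_forever()
```

Calling `serve()` starts the proxy on the assumed port 8080. The `inject()` function is kept separate from the handler so that the rewriting step can be checked without any network traffic.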

Captchas with vDDoS

In addition to commercial providers with professional web protection, you can find free applications on GitHub, such as the vDDoS software [7], which presents captchas to its clients. Every web visitor has probably stumbled across this kind of task and had to click on the right photos from a selection (Figure 3). This activity is intended to distinguish human visitors from scripts.

Figure 3: With the help of an image puzzle in vDDoS, the website checks whether a human or a script wants access.

The tool can be installed on Linux with just a few lines:

    wget https://raw.githubusercontent.com/duy13/vDDoS-Protection/master/latest.sh
    chmod +x latest.sh
    ./latest.sh

All components are then available under /vddos on the local filesystem. In the best open source manner, the developers of vDDoS do not code everything from scratch but use existing software with a free license: Nginx for the web server, Gunicorn as the web gateway, and Flask as the Python framework. You need to describe your websites and the desired protection method in the configuration.

In Listing 1, vDDoS looks like an HTTP/S server (Listen column) with different levels of protection (Security) when viewed from the outside; it addresses several servers in the background (Backend). The starting signal is given by the vddos start command. vDDoS then takes off and offers the configured websites from its IP address, with built-in DDoS protection.

Listing 1

vDDoS as a Reverse Proxy

    Website            Listen               Backend              Cache  Security  SSL-Prikey              SSL-CRTkey
    default            http://0.0.0.0:80    http://10.1.1.84:80  no     5s        no                      no
    www.example.net    https://0.0.0.0:443  http://10.1.1.84:80  no     307       /vddos/ssl/example.key  /vddos/ssl/example.crt
    login.example.net  https://0.0.0.0:443  http://10.1.1.85:80  no     captcha   /vddos/ssl/example.key  /vddos/ssl/example.crt
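To check such a configuration before starting the service, the rows can be read into records with a few lines of Python. This sketch is not part of vDDoS; it merely assumes the whitespace-separated, seven-column layout shown in Listing 1, and the handling of lines starting with # as comments is likewise an assumption.

```python
from dataclasses import dataclass
from typing import List

LISTING_1 = """\
Website            Listen               Backend              Cache  Security  SSL-Prikey              SSL-CRTkey
default            http://0.0.0.0:80    http://10.1.1.84:80  no     5s        no                      no
www.example.net    https://0.0.0.0:443  http://10.1.1.84:80  no     307       /vddos/ssl/example.key  /vddos/ssl/example.crt
login.example.net  https://0.0.0.0:443  http://10.1.1.85:80  no     captcha   /vddos/ssl/example.key  /vddos/ssl/example.crt
"""


@dataclass
class Site:
    """One row of the table: a public site and its protection settings."""
    website: str
    listen: str
    backend: str
    cache: str
    security: str
    ssl_prikey: str
    ssl_crtkey: str


def parse_sites(text: str) -> List[Site]:
    """Turn the whitespace-separated table into a list of Site records."""
    sites = []
    for number, line in enumerate(text.splitlines(), start=1):
        fields = line.split()
        # Skip blank lines, assumed '#' comments, and the header row
        if not fields or fields[0].startswith("#") or fields[0] == "Website":
            continue
        if len(fields) != 7:
            raise ValueError(f"line {number}: expected 7 columns, got {len(fields)}")
        sites.append(Site(*fields))
    return sites


if __name__ == "__main__":
    for site in parse_sites(LISTING_1):
        print(f"{site.website}: {site.listen} -> {site.backend} ({site.security})")
```

A check like this catches rows with missing columns early, for example an HTTPS listener without key and certificate paths.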

Search Engines and Crawlers

Whichever DDoS protection you choose, you do not want to block the crawlers of the major search engines. If the snooping bot from Google, Microsoft, or DuckDuckGo encounters a JavaScript challenge or Fail2Ban strikes, it will simply stop indexing your site. Fortunately, the operators provide all the IP addresses under which their bots map out the Internet. You need to add these addresses to your personal whitelist to make sure that nothing stands in the way of the search bots.
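A whitelist check of this kind could look like the following sketch, which relies on Python's standard ipaddress module. The two networks are documentation placeholders, not real crawler ranges; the actual values would have to come from the lists that the search engine operators publish.

```python
import ipaddress

# Placeholder ranges reserved for documentation; replace them with the
# ranges published by the search engine operators.
CRAWLER_RANGES = ["192.0.2.0/24", "2001:db8::/32"]


def build_allowlist(cidrs):
    """Convert CIDR strings into network objects once, at startup."""
    return [ipaddress.ip_network(cidr) for cidr in cidrs]


def is_allowed(client_ip: str, allowlist) -> bool:
    """Return True if the client address lies in one of the listed networks."""
    address = ipaddress.ip_address(client_ip)
    # An IPv4 address is never reported as a member of an IPv6 network,
    # and vice versa, so mixed lists are safe.
    return any(address in network for network in allowlist)


if __name__ == "__main__":
    allowlist = build_allowlist(CRAWLER_RANGES)
    for ip in ("192.0.2.17", "198.51.100.5", "2001:db8::1"):
        print(ip, "skip challenge" if is_allowed(ip, allowlist) else "challenge")
```

The protector would run this check before its rate limiting and challenges, so that requests from listed crawler addresses pass straight through to the back end.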
