
Storage across the network with iSCSI and Synology DiskStation Manager

Across the Block

Storage Manager and Pool

Regardless of your hardware setup, you are now at the point where the system portion of your server is in place, and you could freeze it in this state. The next step is to configure all of the storage on which you've spent your hard-earned dollars. In that respect, the last steps server-side are to configure the storage pool and volume and map it to a newly created iSCSI qualified name (IQN), which, simply put, is a unique name that identifies an iSCSI node. Following this mapping, the NAS will start accepting incoming connections on the default iSCSI port, which is TCP/3260.

The combination of that IQN and TCP/3260 on the server's address is the endpoint that allows client-server communication. It is important to understand that every participant in the iSCSI dance needs one of these names: Both server and client require a unique IQN, properly configured on each end, before the Debian system can attach (and manage) remote devices.
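The IQN format follows a fixed pattern defined in RFC 3720: the literal iqn, a year-month date, a reversed domain name, and an optional suffix after a colon. The following is a minimal sketch of a format check, not a full validator; the helper name is_valid_iqn is hypothetical, and the regular expression is a simplified approximation of the naming rules. On Debian-based clients, open-iscsi typically stores the initiator's own IQN in /etc/iscsi/initiatorname.iscsi.

```shell
#!/bin/sh
# is_valid_iqn (hypothetical helper): succeed if $1 looks like an IQN.
# Simplified pattern: iqn.YYYY-MM.reversed.domain[:suffix]
is_valid_iqn() {
    printf '%s\n' "$1" |
        grep -Eq '^iqn\.[0-9]{4}-(0[1-9]|1[0-2])\.[a-z0-9]([a-z0-9.-]*[a-z0-9])?(:.+)?$'
}

# Example: the target name used in this article
if is_valid_iqn "iqn.2000-01.com.synology:MYDEVICE.Target-1.4d9e3bda2ee"; then
    echo "target IQN looks well formed"
fi
```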

To do so, you first need to access your server's storage manager, which, in my case, is located at the top left-hand side of the DSM Main Menu (Figure 2). Next, create both a storage pool and a volume using the built-in guidance wizard, which prompts you to select the preferred RAID type. In my case, JBOD, RAID 0, RAID 1, RAID 5, RAID 6, and even a proprietary solution called SHR were available.

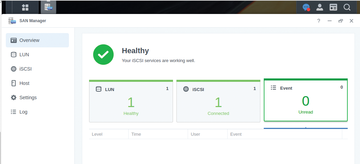

Figure 2: Victory! The SAN Manager now shows that all configurations are correctly in place on the NAS.


For data flow and business continuity, I opted for RAID 6, selected all four of my drives to create one big happy pool, and clicked Next. I was then asked to specify the maximum size, for which a button conveniently sets the maximum, after which the storage pool was created successfully. Depending on the size and speed of your drives, this step can take from a few minutes to several hours to complete.
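As a rough guide to what the wizard's maximum will be, RAID 6 reserves the equivalent of two drives for parity, so usable capacity is approximately the drive count minus two, multiplied by the size of one drive. The following sketch of that arithmetic assumes four equally sized 4TB drives (the drive size is an assumption, not something stated here), uses a hypothetical helper name, and ignores filesystem and DSM overhead.

```shell
#!/bin/sh
# raid6_usable_tb (hypothetical helper): usable capacity in TB for
# a RAID 6 array of $1 equally sized drives of $2 TB each.
raid6_usable_tb() {
    drives=$1
    size_tb=$2
    if [ "$drives" -lt 4 ]; then
        echo "RAID 6 needs at least four drives" >&2
        return 1
    fi
    # Two drives' worth of space is consumed by parity.
    echo $(( (drives - 2) * size_tb ))
}

raid6_usable_tb 4 4   # assumed: four 4TB drives
```

Eight decimal terabytes is roughly 7.28TiB, which would be in line with the 7.2T that lsblk reports later, although the drive size remains an assumption.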

Once finished, the volume shows up under the storage pool of your device. You then have to bind this newly created storage pool with an existing iSCSI logical unit number (LUN) from the SAN Manager icon located under the top left-hand menu.

From the SAN Manager page, you will also be able to confirm in the Overview section (Figure 2) the status of any currently existing (or not) LUNs. This dashboard also helps end-users get a quick assessment of the number of connected LUNs, alongside any possible errors that might be plaguing their NAS. Clicking on the iSCSI entry in the left sidebar of the SAN Manager will allow you to launch a creation wizard that lets you create a new LUN. The reason behind this step is to initiate a new LUN, to which you can later attach your newly created storage volume comprising your (in my case, four) drives.

Because Synology has launched itself into the storage business, it is possible your drives will not be on its compatibility list (as mine weren't). However, that need not be a concern; rest assured that you can safely move forward, ignoring anything having to do with drive compatibility.

Once this step is complete, you should see the newly created iSCSI target displayed on the SAN Manager dashboard. Rejoice! Again, as quickly as this can happen with state-of-the-art hardware, older systems might require much more time before your LUN is created and made accessible.

When the build process finally completes, the NAS displays the much-anticipated LUN. The last thing you'll need to do in the Edit | Mapping tab in DSM is bind the iSCSI target by selecting (and applying) the IQN target to the recently built LUN. In my case, none existed, so I had to create one by following the user interface's creation wizard (Figures 3 and 4).

Client

Back on the 'bookworm' system, I want to deploy the open-iscsi package [3] (the RFC 3720 iSCSI implementation for Linux). To create a secure infrastructure, install the cryptsetup package alongside it. After the iSCSI protocol has successfully attached the remote drive to the client (Listings 2 and 3), I format it with LUKS [4], followed by the ext4 filesystem. Only then can I really start relying on my mountpoint. To install these packages, issue the command:

$ sudo apt install cryptsetup open-iscsi -y

Once both packages are installed and you are back at the prompt, you can truly begin to profit from your iSCSI journey (Figure 5). Issuing the lsblk command from the client reveals the block devices directly available on this node. As you can see from Listing 1, no remote units are currently attached.

Listing 1

Client System Before Attaching an iSCSI Device

$ uname -a
Linux DANSBOX 6.8.0-35-generic #35-Ubuntu SMP PREEMPT_DYNAMIC Mon May 20 15:51:52 UTC 2024 x86_64 x86_64 x86_64 GNU/Linux
$ lsblk
NAME   MAJ:MIN RM  SIZE RO TYPE MOUNTPOINTS
sda      8:0    0   25G  0 disk
|-sda1   8:1    0    1M  0 part
|-sda2   8:2    0   25G  0 part /var/snap/firefox/common/host-hunspell

In my case, I also want to configure my client's firewall to ensure that traffic goes through port TCP/3260:

$ sudo ufw allow 3260/tcp

Because I set a static IP on my device earlier, I can also whitelist the entire host:

$ sudo ufw allow from 192.168.1.10

Perhaps the most secure method is to confine it to the static IP and port, instead of including the entire host:

$ sudo ufw allow from 192.168.1.10 to any port 3260
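Before moving on, it can help to confirm that the NAS portal is actually reachable on TCP/3260 from the client. The following is a minimal sketch that assumes Bash is installed (for its /dev/tcp pseudo-device) along with the coreutils timeout command; the function name port_open is hypothetical.

```shell
#!/bin/sh
# port_open (hypothetical helper): succeed if a TCP connection to
# host $1, port $2 can be opened within three seconds.
# Bash is invoked explicitly because /dev/tcp is a Bash feature.
port_open() {
    timeout 3 bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$1" "$2" 2>/dev/null
}

# Usage, with the NAS address from this article:
#   port_open 192.168.1.10 3260 && echo "portal reachable"
```

A failure here points to a network or firewall problem rather than an iSCSI configuration problem, which narrows down troubleshooting.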

After implementing all of these steps and restarting the service, a status check with

$ sudo systemctl status iscsi.service

should give you the green light and show that the service is up and running, as you can see in Listing 2, which also confirms the IQN served by the NAS.

Listing 2

Forcing an iSCSI Connection

$ sudo /sbin/iscsiadm -m node -T iqn.2000-01.com.synology:MYDEVICE.Target-1.4d9e3bda2ee -p 192.168.1.10 -l
Logging in to [iface: default, target: iqn.2000-01.com.synology:MYDEVICE.Target-1.4d9e3bda2ee, portal: 192.168.1.10,3260]
Login to [iface: default, target: iqn.2000-01.com.synology:MYDEVICE.Target-1.4d9e3bda2ee, portal: 192.168.1.10,3260] successful.
$ lsblk
NAME   MAJ:MIN RM  SIZE RO TYPE MOUNTPOINTS
sda      8:0    0   25G  0 disk
|-sda1   8:1    0    1M  0 part
|-sda2   8:2    0   25G  0 part /var/snap/firefox/common/host-hunspell
sdb      8:16   0  7.2T  0 disk
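To identify which device name a freshly attached LUN received without comparing two lsblk outputs by eye, you can snapshot the list of top-level block devices before and after the login. The following is a minimal sketch; the helper names and snapshot file locations are hypothetical.

```shell
#!/bin/sh
# list_disks: print the names of top-level block devices, sorted.
list_disks() {
    lsblk -dn -o NAME | sort
}

# new_disks (hypothetical helper): print names present in the "after"
# snapshot file ($2) but absent from the "before" snapshot file ($1).
# Both files must be sorted, as list_disks produces them.
new_disks() {
    comm -13 "$1" "$2"
}

# Usage:
#   list_disks > /tmp/disks.before
#   (log in to the iSCSI target)
#   list_disks > /tmp/disks.after
#   new_disks /tmp/disks.before /tmp/disks.after
```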

The time has come to establish the first iSCSI session and attach the LUN. To do so, send a discovery command with the iscsiadm binary:

$ sudo /sbin/iscsiadm --mode discovery --op update --type sendtargets --portal 192.168.1.10

You can unpack this command by reading the iscsiadm man page [5]. Briefly, it allows you to discover the IQN of your server and, therefore, allows you to attach the unit to the client. You can confirm that you do have a new storage unit by replaying the lsblk command (Figure 6).
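The discovery step normally prints one line per target, with the portal and group tag followed by the IQN. The following minimal sketch extracts just the IQNs so they can be passed to a later login command. The function name is hypothetical, and the sample line is assembled from the addresses used in this article rather than captured from a real run.

```shell
#!/bin/sh
# discovered_iqns (hypothetical helper): read sendtargets discovery
# output on stdin and print the target IQN from each line.
discovered_iqns() {
    awk '$2 ~ /^iqn\./ { print $2 }'
}

# Illustrative input only; on a real client, pipe in the output of
# the discovery command instead.
printf '%s\n' \
    '192.168.1.10:3260,1 iqn.2000-01.com.synology:MYDEVICE.Target-1.4d9e3bda2ee' |
    discovered_iqns
```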

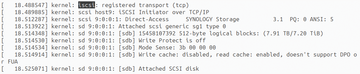

Figure 6: After all has been put into place successfully, the client system will automatically recognize the iSCSI drive at the next client boot. Moving forward, the dmesg command will show the remote attachment of a drive being automatically made by the client. Listing 3 confirms the new availability of a 7.2TB storage unit.


Listing 3

Confirming iSCSI Status

$ sudo systemctl status iscsi.service
open-iscsi.service - Login to default iSCSI targets
     Loaded: loaded (/usr/lib/systemd/system/open-iscsi.service; enabled; preset: enabled)
     Active: active (exited) since Thu 2024-07-04 20:55:15 EDT; 5 days ago
       Docs: man:iscsiadm(8)
             man:iscsid(8)
   Main PID: 1696 (code=exited, status=0/SUCCESS)
        CPU: 10ms

Jul 04 20:55:15 DANSBOX systemd[1]: Starting open-iscsi.service - Login to default iSCSI targets...
Jul 04 20:55:15 DANSBOX iscsiadm[1646]: Logging in to [iface: default, target: iqn.2000-01.com.synology>
Jul 04 20:55:15 DANSBOX iscsiadm[1646]: Login to [iface: default, target: iqn.2000-01.com.synology:MYDEVICE>
Jul 04 20:55:15 DANSBOX systemd[1]: Finished open-iscsi.service - Login to default iSCSI targets.

Because I am working in a secure environment, I will go ahead and create a LUKS container:

$ sudo cryptsetup -y -v luksFormat /dev/sdb

Once (or while) this process completes, make sure everything is in place to mount the new drive (e.g., create a mount point; change its ownership, possibly to your unprivileged user; and set its permissions to 777). When LUKS formatting is complete and your system has everything needed to host the mount, you can open the LUKS container by issuing (in my case),

$ sudo cryptsetup luksOpen /dev/sdb BU

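which maps the unlocked contents of /dev/sdb to the name BU, exposed as /dev/mapper/BU; moving forward, you can reference the container by this simpler name instead of having to work with its longer one. Next, format the opened LUKS container with the filesystem of your choice, taking care to target the mapped device rather than the raw /dev/sdb, because formatting the raw device would overwrite the LUKS header. In my case, I opted for the venerable ext4:

$ sudo mkfs -t ext4 /dev/mapper/BU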
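The filesystem still has to be mounted before it can be used. The following is a minimal sketch of the remaining commands, assuming the /BU mount point that Listing 5 later unmounts and the BU mapping name from the luksOpen step. The helper names are hypothetical, and the script defaults to a dry run that only prints what it would do.

```shell
#!/bin/sh
MAPPER_NAME="BU"      # name given to luksOpen in this article
MOUNT_POINT="/BU"     # mount point that Listing 5 later unmounts

# run: print the command when DRY_RUN is 1 (the default here),
# otherwise execute it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# mount_encrypted (hypothetical helper): mount the opened container.
# Ownership is changed after mounting so that it applies to the root
# of the new filesystem rather than to the empty directory beneath it.
mount_encrypted() {
    run sudo mkdir -p "$MOUNT_POINT" &&
    run sudo mount "/dev/mapper/$MAPPER_NAME" "$MOUNT_POINT" &&
    run sudo chown "$(id -un):$(id -gn)" "$MOUNT_POINT"
}

mount_encrypted   # prints the three commands; set DRY_RUN=0 to execute
```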

Of course, LUKS is agnostic to whichever filesystem layer you end up choosing. Only at this point will everything be configured properly on the client system. You can also become better acquainted with your cryptographic footprint with,

$ sudo cryptsetup -v status BU

or create more verbose output with

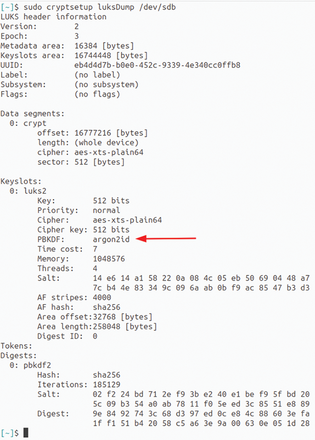

$ sudo cryptsetup luksDump /dev/sdb

as shown in Figure 7.

Figure 7: When dealing with any LUKS container, be sure the password-based key derivation function (PBKDF) value is set to argon2id; otherwise, it will be more vulnerable to brute force attacks.
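A quick way to check this value without reading the whole dump is to filter the luksDump output for the PBKDF field of each keyslot. The following is a minimal sketch, assuming the LUKS2 dump layout in which each keyslot prints a line beginning with PBKDF:; the function name and the sample input are illustrative only.

```shell
#!/bin/sh
# keyslot_pbkdfs (hypothetical helper): read `cryptsetup luksDump`
# output on stdin and print the PBKDF named for each keyslot.
keyslot_pbkdfs() {
    awk '$1 == "PBKDF:" { print $2 }'
}

# Real use:
#   sudo cryptsetup luksDump /dev/sdb | keyslot_pbkdfs
# Illustrative input:
printf '\tPBKDF:      argon2id\n' | keyslot_pbkdfs
```

If a keyslot reports pbkdf2 instead, the cryptsetup man page describes the --pbkdf option and the luksConvertKey action, which are the usual starting points for changing it.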


Client – Daily Interaction

All iSCSI session connection specifics are displayed by the command:

$ sudo /sbin/iscsiadm -m node -o show

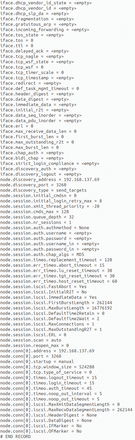

Figure 8 shows a lot more information from the iSCSI sessions' properties located under the server folder:

/etc/iscsi/nodes/iqn.2000-01.com.synology:MYDEVICE.Target-1.4d9e3bda2ee/ 192.168.1.10,3260,1/default

Figure 8: The iscsiadm command issued as the root user can show more results than are contained in the default configuration file located under the /etc/iscsi/nodes/ path.
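Each node record under that path is a plain-text list of key = value settings, and the node.startup key decides whether the client logs in to the target automatically at boot. The following minimal sketch reads that key from a record file; the function name is hypothetical, and the record files are typically readable only by root.

```shell
#!/bin/sh
# node_startup_mode (hypothetical helper): print the value of
# node.startup from the node record file given as $1.
node_startup_mode() {
    awk -F ' = ' '$1 == "node.startup" { print $2 }' "$1"
}

# Usage, from a root shell, with the record path from this article:
#   node_startup_mode \
#     "/etc/iscsi/nodes/iqn.2000-01.com.synology:MYDEVICE.Target-1.4d9e3bda2ee/192.168.1.10,3260,1/default"
#
# To switch a target to automatic login, iscsiadm can update the record:
#   sudo iscsiadm -m node -T <IQN> -p 192.168.1.10 \
#     --op update -n node.startup -v automatic
```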


If you do not want to use a terminal to access the remote drive, you can use the MATE Caja file manager. Given that it does not display drives in the left tree panel, you should issue the commands from Listing 4.

Listing 4

Discover Running Sessions

$ sudo /sbin/iscsiadm -m node -T iqn.2000-01.com.synology:MYDEVICE.Target-1.4d9e3bda2ee -p 192.168.1.10 -l
Logging in to [iface: default, target: iqn.2000-01.com.synology:MYDEVICE.Target-1.4d9e3bda2ee, portal: 192.168.1.10,3260]
Login to [iface: default, target: iqn.2000-01.com.synology:MYDEVICE.Target-1.4d9e3bda2ee, portal: 192.168.1.10,3260] successful.
$ sudo iscsiadm -m session
tcp: [15] 192.168.1.10:3260,1 iqn.2000-01.com.synology:MYDEVICE.Target-1.4d9e3bda2ee (non-flash)
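For scripting, the session list is easy to reduce to the two fields you usually need: the session ID and the target IQN. The following minimal sketch assumes the line layout shown in Listing 4; the function name is hypothetical.

```shell
#!/bin/sh
# session_summary (hypothetical helper): read `iscsiadm -m session`
# output on stdin and print "<sid> <target IQN>" for each session.
session_summary() {
    awk '$1 == "tcp:" { gsub(/\[|\]/, "", $2); print $2, $4 }'
}

# Sample line taken from Listing 4; on a real client, use
#   sudo iscsiadm -m session | session_summary
printf '%s\n' \
    'tcp: [15] 192.168.1.10:3260,1 iqn.2000-01.com.synology:MYDEVICE.Target-1.4d9e3bda2ee (non-flash)' |
    session_summary
```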

If you need to detach from a block-level-mounted device, first use the umount command to detach the ext4 partition from your system, as you would generally do, and then close the LUKS container with:

$ sudo /usr/sbin/cryptsetup luksClose BU

You can then conclude the removal of the LUN from your system by closing the iSCSI session:

$ sudo /usr/sbin/iscsiadm -m node -T iqn.2000-01.com.synology:MYDEVICE.Target-1.4d9e3bda2ee -p 192.168.1.10:3260 -u

The entire process is shown in Listing 5.

Listing 5

Unmounting Encrypted iSCSI

$ sudo umount /BU
$ sudo cryptsetup luksClose BU
$ sudo /usr/sbin/iscsiadm -m node -T iqn.2000-01.com.synology:MYDEVICE.Target-1.4d9e3bda2ee -p 192.168.1.10:3260 -u
Logging out of session [sid: 14, target: iqn.2000-01.com.synology:MYDEVICE.Target-1.4d9e3bda2ee, portal: 192.168.1.10,3260]
Logout of [sid: 14, target: iqn.2000-01.com.synology:MYDEVICE.Target-1.4d9e3bda2ee, portal: 192.168.1.10,3260] successful.
$ sudo iscsiadm -m session
iscsiadm: No active sessions.
$ df -H
tmpfs       411M  1.4M  410M   1% /run
/dev/sda2    27G   13G   13G  50% /
tmpfs       2.1G     0  2.1G   0% /dev/shm
tmpfs       5.3M     0  5.3M   0% /run/lock
tmpfs       411M  193k  411M   1% /run/user/1000
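Because the order matters (filesystem first, then the LUKS mapping, then the iSCSI session), the three commands lend themselves to a small wrapper that stops at the first failure. The following is a minimal sketch with a hypothetical function name; the RUNNER variable is an illustrative convention that defaults to echo, so the commands are only printed unless you set it to sudo.

```shell
#!/bin/sh
TARGET="iqn.2000-01.com.synology:MYDEVICE.Target-1.4d9e3bda2ee"
PORTAL="192.168.1.10:3260"

# detach_encrypted (hypothetical helper): unmount, close the LUKS
# mapping, and log out of the iSCSI session, in that order.
# Each step runs only if the previous one succeeded, so a busy
# filesystem prevents the container and session from being closed.
detach_encrypted() {
    runner=${RUNNER:-echo}   # echo = dry run; RUNNER=sudo executes
    $runner umount /BU &&
    $runner cryptsetup luksClose BU &&
    $runner iscsiadm -m node -T "$TARGET" -p "$PORTAL" -u
}

detach_encrypted   # dry run: prints the three commands in order
```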
