
Detecting system compromise

Foundational Security

Good Integrity Is Runtime Integrity

What, then, constitutes an adequate measurement? One way to address that question is to ask which parts of the system need measurements: the operating system, the system software that lives outside of the operating system, the wide array of applications and supporting software (e.g., databases and configurations)? You might also need to include any number of supporting systems, each with its own software, in some integrity reports. The details of what is measured, how, and from where are a complex subject. The answer ultimately depends on the use case and the potential consequences of compromise. In any event, how attackers compromise system integrity must inform the decision.

Another way to address the question is to consider what a measurement of any component deemed to need one should include. This is the area in which runtime integrity can drastically improve on traditional practice. Traditionally, integrity has been measured over the image of the software resident on disk before it runs or over the executable portion of the in-memory image just before it runs. This static measurement uses a cryptographic hash function, which produces a value that is, for practical purposes, unique to its input and can be used like a fingerprint in an attestation. If the hash matches the expected value, the software is considered unmodified.
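As a minimal sketch of static measurement, the following Python hashes a stand-in image with SHA-256 and compares the digest to a value recorded ahead of time. The function names and the sample image bytes are hypothetical and chosen only for illustration; real secure boot implementations differ in where and how the expected value is stored.

```python
import hashlib

def measure_image(image: bytes) -> str:
    """Return the SHA-256 digest of a software image as a hex string."""
    return hashlib.sha256(image).hexdigest()

def appraise_static(image: bytes, expected_digest: str) -> bool:
    """Static appraisal: the image passes only if its digest matches."""
    return measure_image(image) == expected_digest

# Hypothetical stand-in for an executable image read from disk.
good_image = b"\x7fELF...example program text..."
expected = measure_image(good_image)  # recorded while the image is trusted

# A modified image of the same length still yields a different digest.
tampered_image = good_image.replace(b"example", b"exploit")

print(appraise_static(good_image, expected))
print(appraise_static(tampered_image, expected))
```

Note that the check says nothing about what happens to the software after it starts running, which is the limitation the rest of this section addresses.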

This method of measurement is core to secure boot technologies. Although secure boot can be an important technology for establishing the security of an initial software environment, it is generally inadequate for true system integrity. These static load-time measurement techniques fall short in two important ways.

The first way is that the image used in the measurement does not represent the entire image that can affect system security. Once software begins execution, a tremendous amount of relevant integrity information is found in the dynamic state of the running software, including the processor state that indicates from which portion of memory the processor is actually executing, as well as all of the data structures used during software execution. In the case of the operating system, this dynamic state affects every aspect of a system's management of resources.

Many well-known examples of system compromises have resulted from gaining privileged execution and then corrupting system state. Static load-time measurements can never incorporate this information because it doesn't yet exist when measurement is done. Runtime integrity can produce superior results by extending the notion of system integrity measurement to include all dynamic states.

Because measurement is performed while the system is fully running, the measurement process is free to inspect every corner of the execution environment for possible corruption. Additionally, most modern systems employ some sort of dynamic code rewriting as the software begins execution, so static hashing techniques can no longer generate predictable hash values over the software once it is running. Runtime integrity mechanisms can be instrumented to deal with this complication.

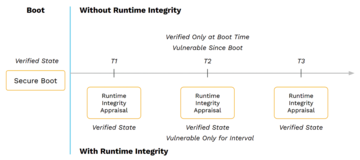

The second way static measurement techniques fall short is that the measurement result begins to go stale the instant it is produced. Any inference about system integrity is confined to conclusions based on information collected when the software began execution. Any changes in system state since then, including malicious changes resulting from an attack, will not be reflected. Runtime integrity improves on the situation because it can be run at any time and as often as desired, capturing fresh measurements of the entire dynamic state (Figure 3). This method, in effect, moves the start of the window of vulnerability from boot time to the time of the most recent measurement.

Runtime integrity measurement works by examining data structures for specific data values deemed relevant to system integrity. Configuration files are created from prior analysis of the source code for each target system. The configurations identify which data structures need examination and the offsets in each to the desired data. The runtime integrity system creates a measurement by traversing the system image in memory, following data structure links to discover new instances of each identified structure, and then recording the configured values from the specified offsets. When completed, all or part of the measurement, according to the use case, is sent off for appraisal in an attestation.
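The traversal described above can be sketched in a few lines of Python. Everything here is an assumption made for illustration: memory is simulated as a byte string, the structure layout in `CONFIG` is invented, and address 0 is treated as the end-of-list marker. A real measurement agent would read live kernel memory and use layouts derived from the target's source code.

```python
import struct

# Hypothetical configuration derived from source analysis: for each
# structure type, the offset of the link to the next instance and the
# offsets of the 8-byte values to record.
CONFIG = {
    "node": {"next_offset": 0, "record_offsets": {"handler": 8, "flags": 16}},
}

def read_u64(memory: bytes, address: int) -> int:
    """Read a little-endian 64-bit value from the simulated memory image."""
    return struct.unpack_from("<Q", memory, address)[0]

def measure(memory: bytes, start: int, struct_name: str, config=CONFIG):
    """Follow links from `start`, recording configured values per instance."""
    layout = config[struct_name]
    measurement = []
    seen = set()  # guards against cycles in a corrupted list
    address = start
    while address != 0 and address not in seen:
        seen.add(address)
        entry = {"address": address}
        for name, offset in layout["record_offsets"].items():
            entry[name] = read_u64(memory, address + offset)
        measurement.append(entry)
        address = read_u64(memory, address + layout["next_offset"])
    return measurement

def build_memory() -> bytes:
    """Build a tiny simulated image holding two linked structure instances."""
    memory = bytearray(128)
    struct.pack_into("<QQQ", memory, 32, 64, 0x1000, 1)  # node at 32, next at 64
    struct.pack_into("<QQQ", memory, 64, 0, 0x2000, 3)   # node at 64, end of list
    return bytes(memory)

for entry in measure(build_memory(), 32, "node"):
    print(entry)
```

The resulting list of recorded values is the measurement; depending on the use case, all or part of it would be passed on for appraisal.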

Nothing Good Is Free

Runtime integrity is a big step forward that allows integrity systems to yield much better results. Like most beneficial things, it does not come without cost. Runtime integrity measurement is a complex process that incurs overhead in both time and system resources. Although you might be tempted to apply runtime integrity to every conceivable part of the system, as frequently as physically possible, to maximize security value, more limited choices can minimize the effect on a system while still likely providing sufficient results.

With the caveat that these choices can't be made in a vacuum, absent any notion of use cases, a proven strategy is to focus measurement on those portions of the executing software attractive to attackers – namely, those portions that can directly affect execution control flow and data structures that affect decisions about control flow.

Runtime integrity also complicates attestation and appraisal. Although runtime integrity measurement reports can contain a plethora of information about the exact nature of a compromise, larger integrity reports need to be included in attestations. Likewise, more complex reports require more complex appraisals that need to make sense of more measurement data. Appraisal preprocessing strategies that produce partial results can be employed to reduce attestation costs, but appraisal can be a daunting challenge.

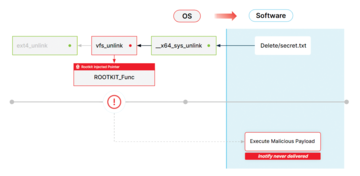

As an example of how runtime integrity can be tailored to focus on high-value data structures in the kernel, consider how the Linux kernel handles file operations such as read, write, or delete. Linux abstracts away the device driver operations that implement these functions for specific devices. For each active file, it maintains as part of its dynamic runtime state a data structure of function pointers, one for each device operation. The file operations can thus be accessed in a generic way without regard to the specifics of different devices.

This sort of elegant programming solution gives attackers who have compromised the kernel an opportunity to hide malicious code in it by replacing the values in the file operations structure with pointers to inserted malicious code (Figure 4). In this example, an attacker might replace the pointer to the file's delete operation with a pointer to malicious code, which can then be triggered at some future time simply by deleting a file.

Because analysis of the Linux kernel has identified these structures as relevant to integrity, the runtime integrity system is configured to extract the relevant pointer information from them. The measurement process will discover every instance of this data structure and record the active function pointer values for later comparison with the expected values. The runtime integrity system doesn't need to know how the values were compromised. Any variance from the known expected values allows detection, regardless of the means the attacker used to corrupt the system. The same technique is employed for every other type of kernel data structure identified in the a priori analysis.
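To make the file operations example concrete, here is a small Python model of it. The `FileOps` class is a simplified, hypothetical stand-in for a table of operation pointers, not the kernel's actual structure, and the addresses are invented. The sketch records pointer values, simulates an attacker redirecting the delete operation, and lists every variance from the expected values.

```python
from dataclasses import dataclass, asdict

@dataclass
class FileOps:
    """Simplified, hypothetical stand-in for a table of operation pointers."""
    read: int
    write: int
    delete: int

def record_pointers(open_files):
    """Record the current pointer values for every known file."""
    return {name: asdict(ops) for name, ops in open_files.items()}

def variances(recorded, expected):
    """List (file, operation, found, expected) for every mismatch."""
    found = []
    for name, ops in recorded.items():
        for op, value in ops.items():
            want = expected[name][op]
            if value != want:
                found.append((name, op, value, want))
    return found

# Hypothetical addresses of the legitimate driver functions.
open_files = {"/var/log/app.log": FileOps(read=0x1000, write=0x1100, delete=0x1200)}
expected = record_pointers(open_files)  # snapshot of the known good values

# Simulated attack: redirect the delete operation to injected code.
open_files["/var/log/app.log"].delete = 0xDEAD0000

for name, op, value, want in variances(record_pointers(open_files), expected):
    print(f"{name}: {op} is {value:#x}, expected {want:#x}")
```

The comparison never asks how the pointer was changed; it only observes that the recorded value no longer matches.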

A Word on Appraisal

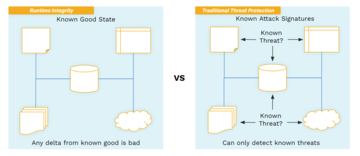

Appraisal of runtime integrity measurements can be framed as a constraint-checking problem. Constraints on the data that indicate the system is unchanged are identified, reducing appraisal to checking whether the attested measurement data satisfies them. Failures indicate where system compromises have occurred. This novel idea allows runtime integrity to function by detecting deltas from a system's known good state instead of only being able to recognize previously seen bad states. Consequently, runtime integrity can detect compromises resulting from both existing and never-before-seen attacks (Figure 5).

Revisiting the above file operation example, appraisal of the measurement data collected about file operations can be expressed as constraints on the values of the recorded function pointers. Constraints are defined that require a match to expected values. Not only will the constraint-checking process indicate a failed appraisal, but it will also identify specific data structure nodes, along with the unexpected values where any corruption has occurred, which is invaluable information for any forensic analysis of an attack.
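One way to picture constraint-based appraisal is the following Python sketch. It assumes, purely for illustration, that a constraint is a set of allowed values per field and that each measurement entry carries a `node` identifier; the field names and addresses are hypothetical. The checker returns one failure record per violated constraint, naming the node and the unexpected value.

```python
def check_constraints(measurement, constraints):
    """Return one failure record per measured value that violates a constraint.

    measurement: list of dicts, each with a "node" id plus field values
    constraints: field name -> set of allowed values
    """
    failures = []
    for entry in measurement:
        for field, allowed in constraints.items():
            value = entry.get(field)
            if value not in allowed:
                failures.append(
                    {"node": entry["node"], "field": field, "value": value}
                )
    return failures

# Hypothetical known good function addresses for each operation.
constraints = {"read": {0x1000}, "write": {0x1100}, "delete": {0x1200}}

measurement = [
    {"node": "file#1", "read": 0x1000, "write": 0x1100, "delete": 0x1200},
    {"node": "file#2", "read": 0x1000, "write": 0x1100, "delete": 0xDEAD0000},
]

report = check_constraints(measurement, constraints)
print("appraisal passed" if not report else report)
```

An empty report means the appraisal passed; a non-empty one doubles as a starting point for forensic analysis.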

The most important innovation that makes runtime integrity tractable in the face of extremely complex and changing systems is an efficient baselining process. In baselining, a running system in a known good state, preferably pristine, is measured to identify specific values for key data, which can be unique to every running system. The resulting data supports both measurement and appraisal of that system. For measurement, baselining helps identify the initial locations of key data structures from which the measurement process can discover the rest. For appraisal, baselining supports constraint generation by identifying the known good values used in the constraints.

Again, revisiting the file operations example, the baselining process allows both the measurement and appraisal processes to work by identifying where in the kernel the measurement process can locate the file operation structures, as well as the correct locations of the device file operation functions.
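A baselining step for this example might look something like the Python sketch below. It assumes a hypothetical `symbols` table captured from the known good system, mapping names to addresses; the names, addresses, and the shape of the returned baseline are all invented for illustration. The output supplies both the starting locations for measurement and the allowed values for appraisal.

```python
def make_baseline(symbols, root_names, allowed_handlers):
    """Derive measurement roots and appraisal constraints from a known good system.

    symbols: name -> address, as observed on the known good system
    root_names: names of structures where traversal should begin
    allowed_handlers: field name -> names of functions permitted in that field
    """
    roots = {name: symbols[name] for name in root_names}
    constraints = {
        field: {symbols[fn] for fn in functions}
        for field, functions in allowed_handlers.items()
    }
    return {"roots": roots, "constraints": constraints}

# Hypothetical addresses; on a real system these can differ on every boot.
symbols = {
    "file_list_head": 0x8000,
    "dev_read": 0x1000,
    "dev_write": 0x1100,
    "dev_delete": 0x1200,
}

baseline = make_baseline(
    symbols,
    root_names=["file_list_head"],
    allowed_handlers={
        "read": ["dev_read"],
        "write": ["dev_write"],
        "delete": ["dev_delete"],
    },
)
print(baseline)
```

Because the addresses are looked up on the running system rather than fixed in advance, the same configuration can serve systems whose memory layouts differ.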
