
Build and host Docker images

Master Builder

Null and Void

Building a Docker image starts with a working directory. As the first step, create a new, empty folder and change into it. It won't stay empty for long, because the very next command creates a file in it:

```shell
$ mkdir nginx && cd nginx
$ touch .dockerignore
```

The .dockerignore file acts as an exclusion list: Files and folders that match its patterns are left out of the build context, so Docker doesn't consider them when building the image.
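To give an idea of what typically goes into the exclusion list, here is a minimal sketch, run from the parent folder, that fills .dockerignore with a few common entries. The patterns are illustrative assumptions, not a required set; list whatever in your build folder the image does not need. Lines starting with # are comments, and the **/ prefix makes a pattern match at any depth.

```shell
#!/bin/sh
# Create the build folder (if needed) and write an example exclusion list.
# The patterns are illustrative; adapt them to your own build folder.
mkdir -p nginx
cat > nginx/.dockerignore <<'EOF'
# Version control metadata
.git
# Editor swap files and macOS folder metadata, anywhere in the tree
**/*.swp
**/.DS_Store
# Top-level log files and a local scratch folder (hypothetical names)
*.log
scratch/
EOF
```

Keeping the build context small this way speeds up the build and prevents stray files from ending up in the image by accident.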

The rest of the process is a little more complicated and requires some knowledge of Docker images. Basically, you have two options at this point: You can either use a prebuilt base image for your image or build one yourself.

Remember that, at its core, a container image is little more than a filesystem. The Linux kernel uses features such as namespaces and cgroups to run processes on that filesystem in a kind of isolated area of the system. Unlike full virtualization or paravirtualization, a Docker image does not need its own kernel. However, it does have to contain all the files that the desired program needs to run, because the host's filesystem is off-limits to the running container.

Bind mounts and volumes can lift this restriction selectively at runtime; however, you will always want to build the container such that it does not depend on software stored externally. The premise is that a Docker image is self-contained, with no dependencies on the outside world.

Virtually every Docker image therefore contains a reasonably complete filesystem of a runnable Linux system. How complete it is depends strongly on the application and its dependencies. Some developers instinctively reach for tools like debootstrap and build their own base systems, but this is rarely a good idea. Even a basic installation of Debian or Ubuntu today includes far more than you really need for a container. Moreover, you have to maintain fully home-built images entirely yourself, which, depending on the situation, can involve a serious amount of work.

Docker saw this problem coming and practically eliminated it with a small hack. Instead of requiring you to keep the entire contents of the container image locally, Docker relies on the FROM instruction in the Dockerfile. FROM references an existing image, typically a public image on Docker Hub, as the basis for the new image, and the build only adds the components the developer requests.

All major distributors maintain micro-images of their own distributions for building containers and make them available on Docker Hub. This includes Red Hat Enterprise Linux (RHEL), SUSE, Ubuntu, Debian, Arch Linux, and the particularly lean Alpine Linux, which is optimized for container operation. Distributors are very good at building mini-images of their own distributions and can do it far more efficiently than an inexperienced end user.

Distributors regularly maintain their images, as well. When a new version of a base image is released, you just need to rebuild your own image on the basis of the new image to eliminate security or functionality issues. One practical side effect is that the local working directory for image building remains easy to understand and clean.
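Rebuilding against a refreshed base image is easy to script. The following sketch wraps docker build in a small helper; the function name rebuild_image is hypothetical, and the image name lm-example/nginx is borrowed from the example later in this article. The --pull option makes Docker check the registry for a newer version of the FROM image instead of reusing a locally cached copy, and --no-cache reruns every step so that package installations fetch current versions.

```shell
#!/bin/sh
# rebuild_image (hypothetical helper): rebuild an image on top of the
# newest published version of its base image.
# Usage: rebuild_image <image-name> [build-context]
rebuild_image() {
    image="$1"
    context="${2:-.}"
    # --pull: look for a newer version of the FROM image in the registry
    # --no-cache: rerun every step, so RUN apt-get install fetches
    #             the current package versions
    docker build --pull --no-cache -t "$image" "$context"
}

# Example: rebuild the NGINX image from the current directory
# rebuild_image lm-example/nginx
```

Dropping --no-cache speeds up the build, but then unchanged RUN steps may be served from the layer cache and miss package updates.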

Another great feature of container building now comes into play: During the build, the RUN instruction can execute commands that, for example, add packages to the distributor's base image. What the developer needs to contribute is therefore typically just the application itself and its files, along with any dependencies that are not available as packages for the chosen base image.

An Example

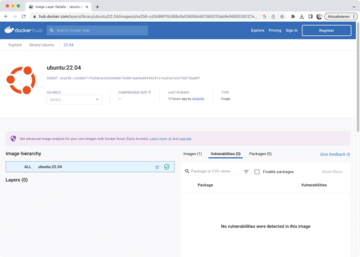

After all this theory, it's time to build the first container. I deliberately kept the following example as simple as possible: an image based on Ubuntu 22.04 (Figure 1) that runs NGINX, with the web server serving up a simple HTML page. The file shown in Listing 2, named Dockerfile, is one of the two basic ingredients required. To experienced container admins, its contents may seem less than spectacular, but if you haven't built a container yet, you may be wondering what each instruction does.

Listing 2

NGINX Dockerfile

```dockerfile
# Pull the minimal Ubuntu image
FROM ubuntu

# Install Nginx
RUN apt-get -y update && apt-get -y install nginx

# Copy the Nginx config
COPY default /etc/nginx/sites-available/default

# Declare the port Nginx listens on (published with -p at runtime)
EXPOSE 80/tcp

# Run the Nginx server in the foreground
CMD ["/usr/sbin/nginx", "-g", "daemon off;"]
```

Figure 1: A preconfigured base image from one of the major distributors is recommended for building your container. A newcomer is unlikely to be able to put together a leaner image without compromising some of the functionality.


FROM is the previously mentioned pointer to a provider's base image, Ubuntu in this case. If you prefer a specific version, you can specify it after a colon (e.g., ubuntu:22.04); without a tag, Docker uses the image tagged latest. RUN executes a command during the build inside the downloaded base image; in the example, it installs the nginx package. COPY copies a file from the local build folder to the specified location in the image. The example assumes that the build folder contains a file named default with the NGINX site configuration (Listing 3), which COPY places in the image as the default site.

Listing 3

File default

```nginx
server {
    listen 80 default_server;
    listen [::]:80 default_server;

    root /usr/share/nginx/html;
    index index.html index.htm;

    server_name _;

    location / {
        try_files $uri $uri/ =404;
    }
}
```

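Back in Listing 2, EXPOSE declares that the container listens on port 80/tcp. The instruction itself does not publish anything; it serves as documentation and image metadata, and the port only becomes reachable from outside when you publish it with docker run -p. Docker executes the CMD instruction when the container starts. In the example, it calls NGINX with daemon mode disabled so that the server stays in the foreground as the container's main process; if NGINX forked into the background, the main process would exit and Docker would stop the container immediately.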
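The difference between declaring and publishing a port can be made visible in a few commands. This sketch assumes the image name lm-example/nginx; the helper name show_ports, the container name web, and the host port 8080 are hypothetical choices for illustration.

```shell
#!/bin/sh
# show_ports (hypothetical helper): EXPOSE is metadata, -p publishes.
show_ports() {
    image="${1:-lm-example/nginx}"
    # Ports declared with EXPOSE, printed as JSON (e.g., {"80/tcp":{}})
    docker image inspect --format '{{json .Config.ExposedPorts}}' "$image" &&
    # Publish container port 80 on host port 8080
    docker run -d --name web -p 8080:80 "$image" &&
    # List the host-to-container port mappings actually in effect
    docker port web
}
```

Without the -p option, docker port would report no mappings, even though the image still declares port 80.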

Next is building and launching the image:

```shell
$ docker build -t lm-example/nginx .
$ docker run -d -p 80:80 lm-example/nginx
```

The first command builds the image. Because the dot tells Docker to use the current directory as the build context, you need to run it directly from the build directory. Afterward, you will find the finished image in the local image store. The second command launches a container from the image for test purposes. If docker ps lists the NGINX container afterward, the build was successful (Figure 2).
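A few more checks go a step beyond docker ps. The following sketch bundles them into a hypothetical smoke_test function; it assumes the container was started from lm-example/nginx with -p 80:80 and that curl is installed on the host. Each step only runs if the previous one succeeded.

```shell
#!/bin/sh
# smoke_test (hypothetical helper): quick checks after build and run.
smoke_test() {
    image="${1:-lm-example/nginx}"
    # The image should be in the local image store
    docker image ls "$image" &&
    # List running containers started from it (empty output means none)
    docker ps --filter "ancestor=$image" &&
    # Test the NGINX configuration baked into the image
    docker run --rm "$image" nginx -t &&
    # Request the start page; -f makes curl fail on HTTP errors
    curl -fsS http://localhost/ >/dev/null &&
    echo "NGINX answered on port 80"
}
```

The nginx -t run uses a throwaway container (--rm), so it does not interfere with the instance already serving on port 80.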

Figure 2: Assuming the build process worked, the container from the example can be launched and creates a working NGINX instance. © Haidar Ali [1]


More Fun with CI/CD

Granted, the example shown is unspectacular and leaves out many image building options, as well as options for running NGINX in a more complex configuration. For example, a production NGINX instance usually needs an SSL/TLS certificate along with the matching key.

The usual way to solve this problem in Docker is to mount a volume containing the respective files into the container at runtime. However, for this to work as intended, NGINX in the container must be preconfigured appropriately. You can use a static configuration for this, although you would need to modify the Dockerfile accordingly. Alternatively, you can pass the parameters as environment variables from the shell in which you, as the admin, launch the container. In the Dockerfile, the developer would then define each variable with an ENV instruction, which sets a default value that docker run -e can override, and scripts inside the container access it as $<VARIABLE>.
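The runtime side of this approach might look like the following sketch. The helper name run_with_tls, the host folder ./certs, the mount point /etc/nginx/certs, and the variable SERVER_NAME are all hypothetical, and the sketch assumes an image whose NGINX configuration listens on port 443 and uses these paths, which Listing 3 does not. Note also that NGINX does not expand environment variables in its configuration files on its own, so the image would need something like a startup script that substitutes them.

```shell
#!/bin/sh
# run_with_tls (hypothetical helper): mount certificates read-only and
# pass a parameter as an environment variable.
run_with_tls() {
    image="${1:-lm-example/nginx}"
    # -v: bind-mount ./certs into the container, read-only (:ro)
    # -e: set SERVER_NAME, overriding any ENV default from the Dockerfile
    docker run -d \
        -p 443:443 \
        -v "$PWD/certs:/etc/nginx/certs:ro" \
        -e SERVER_NAME="${SERVER_NAME:-www.example.com}" \
        "$image"
}
```

Mounting the certificates read-only keeps the private key out of the image and prevents the container from modifying it.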

However, none of this hides the fact that the example is quite rudimentary. In everyday life, especially with more complex applications, you are hardly going to get away with such a small number of instructions, not to mention the problems that arise from maintaining the image. For example, the image built here has not yet been published, and no automated tests check that it works.

All of this can be changed relatively quickly. The magic words are continuous integration and continuous delivery (CI/CD), that is, automating and standardizing the image build and any tests. For example, an image developer who wants to rebuild an image just checks a new version of the Dockerfile into Git, and the CI/CD system attached to the repository handles the rest automatically. When it is done, the new image is available on Docker Hub and ready for use.

Of course, the number of ready-made CI/CD solutions for Docker is now practically approaching infinity, not least because Kubernetes also plays a significant role in this business and has been something of a hot topic for the IT industry as a whole for years. The goal is no longer just to build individual images, but to create complete application packages that find their way, fully automated, into the target Kubernetes instance at the end of a CI/CD pipeline and replace the workload running there without downtime.

You don't have to spend big money to implement CI/CD with Docker. GitHub is the obvious choice: Its GitHub Actions service offers comprehensive CI/CD integration for Docker, including the option of automatically uploading the finished images to Docker Hub.

Initially, much like in this example, you have an almost empty working folder with a Dockerfile and possibly the required additional files. You first put the folder under Git version control and then push the repository to GitHub. In the repository settings, you then define two secrets: DOCKERHUB_USERNAME, containing your Docker Hub username, and DOCKERHUB_TOKEN, containing a Docker Hub personal access token (PAT). Next, add a GitHub Actions workflow to the repository. In this example, the .github/workflows/main.yml file might look like Listing 4.
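The repository setup can also be done from the command line. This sketch assumes that git and an authenticated GitHub CLI (gh) are installed, that an empty GitHub repository already exists and its URL is passed as the first argument, and that DOCKERHUB_USERNAME and DOCKERHUB_TOKEN are set in the calling shell; the function name publish_repo is hypothetical. The secrets are stored before the first push so that the workflow triggered by that push can already log in to Docker Hub.

```shell
#!/bin/sh
# publish_repo (hypothetical helper): put the build folder under Git,
# store the Docker Hub credentials as repository secrets, then push.
# Usage: publish_repo <github-repo-url>
publish_repo() {
    remote_url="$1"
    git init -b main &&
    git remote add origin "$remote_url" &&
    # gh determines the target repository from the origin remote;
    # the workflow reads these values via the secrets context
    gh secret set DOCKERHUB_USERNAME --body "$DOCKERHUB_USERNAME" &&
    gh secret set DOCKERHUB_TOKEN --body "$DOCKERHUB_TOKEN" &&
    git add . &&
    git commit -m "Add Dockerfile and NGINX config" &&
    git push -u origin main
}
```

Secrets can just as well be entered in the repository's web interface under Settings; the CLI route simply makes the setup repeatable.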

Listing 4

Workflow Config for GitHub

```yaml
name: ci

on:
  push:
    branches:
      - "main"

jobs:
  build:
    runs-on: ubuntu-22.04
    steps:
      -
        name: Checkout
        uses: actions/checkout@v3
      -
        name: Login to Docker Hub
        uses: docker/login-action@v2
        with:
          username: ${{ secrets.DOCKERHUB_USERNAME }}
          password: ${{ secrets.DOCKERHUB_TOKEN }}
      -
        name: Set up Docker Buildx
        uses: docker/setup-buildx-action@v2
      -
        name: Build and push
        uses: docker/build-push-action@v4
        with:
          context: .
          file: ./Dockerfile
          push: true
          tags: ${{ secrets.DOCKERHUB_USERNAME }}/am-example:latest
```

Once the workflow file is in the repository, every push to the main branch triggers an automatic rebuild of the image on GitHub, which then uses the stored credentials to push the image to Docker Hub. Once in place on Docker Hub, the finished image can itself become part of CI/CD pipelines that, for example, control deployment within Kubernetes.
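In day-to-day work, triggering a rebuild then boils down to a commit and a push. The sketch below assumes an authenticated GitHub CLI and the workflow file name main.yml from Listing 4; the function name trigger_rebuild is hypothetical. A freshly triggered run can take a few seconds to appear in the list.

```shell
#!/bin/sh
# trigger_rebuild (hypothetical helper): commit changes to tracked files,
# push them to main, and show the latest run of the main.yml workflow.
# Usage: trigger_rebuild [commit-message]
trigger_rebuild() {
    message="${1:-Update Dockerfile}"
    # -a stages modifications to files Git already tracks
    git commit -am "$message" &&
    git push origin main &&
    gh run list --workflow main.yml --limit 1
}
```

Once the run has finished, docker pull <username>/am-example:latest fetches the new image from Docker Hub.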

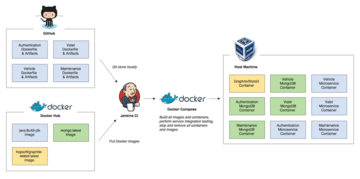

Mind you, GitLab and GitHub are just two of countless vendors trying to make a living with Docker CI/CD. Jenkins, the classic CI/CD tool (Figure 3), is also very much alive in this environment, as are many others.
