« Previous 1 2

CPU affinity in OpenMP and MPI applications

Bindings

numactl

One key tool for pinning processes is numactl [8], which can be used to control the NUMA policy for processes, shared memory, or both. One key thing about numactl is that, unlike taskset, you can't use it to change the policy of a running application. However, you can use it to display information about your NUMA hardware and the current policy (Listing 5). Note for this system, SMT is turned on, so the output shows 64 CPUs.

Listing 5

numactl

$ numactl --hardware available: 1 nodes (0) node 0 cpus: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 node 0 size: 64251 MB node 0 free: 60218 MB node distances: node 0 0: 10

The system has one NUMA node (available: 1 nodes), and all 64 cores are associated with that NUMA node. Because there is only NUMA node, the node distances from NUMA node 0 to NUMA node 0 is listed as 10, which indicates it's the same NUMA node. The output from the command also indicates it has 64GB of memory (node 0 size: 64251 MB).

The advantages of numactl come from its ability to place and bind processes, particularly in relation to where memory is allocated, for which it has several "policies" that are implemented as options to the command:

- The

--interleave=<nodes>policy has the application allocate memory in a round-robin fashion on "nodes." With only two NUMA nodes, this means memory will be allocated first on node 0, followed by node 1, node 0, node 1, and so on. If the memory allocation cannot work on the current interleave target node (nodex), it falls back to other nodes but in the same round-robin fashion. You can control which nodes are used for memory interleaving or use them all:

-----------text04 $ numactl --interleave=all application.exe

This example command interleaves memory allocation on all nodes for application.exe. Note that the sample system in this article has only one node, node 0, so all memory allocation uses it.

- The

--membind=<nodes>policy forces memory to be allocated from the list of provided nodes (including thealloption):

-----------text05 $ numactl --membind=0,1 application.exe

This policy causes application.exe to use memory from node 0 and node 1. Note that a memory allocation can fail if no more memory is available on the specified node.

- The

cpunodebind=<nodes>option causes processes to run only on the CPUs of the specified node(s):

-----------text06 $ numactl --cpunodebind=0 --membind=0,1 application.exe

This policy runs application.exe on the CPUs associated with node 0 and allocates memory on node 0 and node 1. Note that the Linux scheduler is free to move the processes to CPUs as long as the policy is met.

- The

--physcpubind=<CPUs>policy executes the process(es) on the list of CPUs provided:

-----------text07 $ numactl --physcpubind=+0-4,8-12 application.exe

You can also say all, and it will use all of the CPUs. This policy runs application.exe on CPUs 0-4 and 8-12.

- The

--localallocpolicy forces allocation of memory on the current node:

-----------text08 $ numactl --physcpubind=+0-4,8-12 --localalloc application.exe

This policy runs application.exe on CPUs 0-4 and 8-12, while allocating memory on the current node.

- The

--preferred=<node>policy causes memory allocation on the node you specify, but if it can't, it will fall back to using memory from other nodes. To set the preferred node for memory allocation to node 1, use:

----------------text09 $ numactl --physcpubind=+0-4,8-12 --preferred=1 application.exe

This policy can be useful if you want to keep application.exe running, even if no more memory is available on the current node.

To show the NUMA policy setting for the current process, use the --show (-s) option:

$ numactl --show

Running this command on the sample system produces the output in Listing 6.

Listing 6

numactl --show

$ numactl --show policy: default preferred node: current physcpubind: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 cpubind: 0 nodebind: 0 membind: 0

The output is fairly self-explanatory. The policy is default

. The preferred NUMA node is the current one (this system only has one node). It then lists the physical cores (physcpubind) that are associated with the current node, the bound CPU cores (node 0), and to which node memory allocation is bound (again, node 0).

The next examples show some numactl options that define commonly used policies. The first example focuses on running a serial application – in particular, running the application on CPU 2 (a non-SMT core) and allocating memory locally:

-----------text10 $ numactl --physcpubind=2 --localalloc application.exe

The kernel scheduler will not move application.exe from core 2 and will allocate memory using the local node (node 0 for the sample system).

To give the kernel scheduler a bit more freedom, yet keep memory allocation local to provide the opportunity for maximum memory bandwidth, use:

-----------text11 $ numactl --cpunodebind=0 --membind=0 application.exe

The kernel scheduler can move the process to CPU cores associated with node 0 while allocating memory on node 0. This policy helps the kernel adjust processes as it needs, without sacrificing memory performance too much. Personally, I find the kernel scheduler tends to move things around quite often, so I like binding my serial application to a specific core; then, the scheduler can put processes on other cores as needed, eliminating any latency in moving the processes around.

Tool for Monitoring CPU Affinity

Both taskset and numactl allow you to check on any core or memory bindings. However, sometimes they aren't enough, which creates an opportunity for new tools. A good affinity monitoring tool, show_affinity [9], comes from the Texas Advanced Computing Center (TACC).

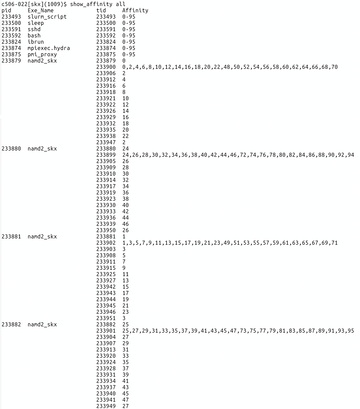

The tool shows "… the core binding affinity of running processes/threads of the current user." The GitHub site has a simple, but long, output example from running the command (Figure 2).

Figure 2: Output of TACC show_affinity tool (used with permission from the GitHub repository owner).

Figure 2: Output of TACC show_affinity tool (used with permission from the GitHub repository owner).

Summary

Today's HPC nodes are complicated, with huge core counts, distributed caches, various memory connections, PCIe switches with connections to accelerators, and NICs, making it difficult to clearly understand where your processes are running and how they are interacting with the operating system. This understanding is extremely critical to getting the best possible performance, so you have HPC and not RAPC.

If you don't pay attention to where your code is running, the Linux process scheduler will move them around, introducing latency and reducing performance. The scheduler can move processes into non-optimal situations, where memory is used from a different part of the system, resulting in much-reduced memory bandwidth. It can also cause processes to communicate with NICs across PCIe switches and internal system connections, again resulting in increased latency and reduced bandwidth. This is also true for accelerators communicating with each other, with NICs, and with CPUs.

Fortunately, Linux provides a couple of tools that allow you to pin (also called binding or setting the affinity of) processes to specific cores along with specific directions on where to allocate memory. In this way, you can prevent the kernel process scheduler from moving the processes or at least control where the scheduler can move them. If you understand how the systems are laid out, you can use these tools to get the best possible performance from your application(s).

In this article, I briefly introduced two tools along with some very simple examples of how you might use them, primarily on serial applications.

Infos

- Multichip Modules: https://en.wikipedia.org/wiki/Multi-chip_module

- Non-Uniform Memory Access (NUMA): https://en.wikipedia.org/wiki/Non-uniform_memory_access

- GkrellM: http://gkrellm.srcbox.net/

- "Processor Affinity for OpenMP and MPI" by Jeff Layton: https://www.admin-magazine.com/HPC/Articles/Processor-Affinity-for-OpenMP-and-MPI

- AMD Ryzen Threadripper: https://www.amd.com/en/products/cpu/amd-ryzen-threadripper-3970x

- First number in the output: https://stackoverflow.com/questions/7274585/linux-find-out-hyper-threaded-core-id

- Taskset command: https://man7.org/linux/man-pages/man1/taskset.1.html

- numactl: https://linux.die.net/man/8/numactl

- show_affinity: https://github.com/TACC/show_affinity

« Previous 1 2

Buy this article as PDF

(incl. VAT)

Buy ADMIN Magazine

Subscribe to our ADMIN Newsletters

Subscribe to our Linux Newsletters

Find Linux and Open Source Jobs

Most Popular

Support Our Work

ADMIN content is made possible with support from readers like you. Please consider contributing when you've found an article to be beneficial.